Vector DBs Will Not Save Your RAG

- Diogo Gonçalves

- Jan 6

- 5 min read

The AI world has collectively fixated on Vector Databases as the holy grail for scalable, accurate information retrieval and synthesis to solve RAG (Retrieval Augmented Generation) applications. The prevailing narrative suggests that once your corpus grows beyond a few documents, you must rely on vector databases and Approximate Nearest Neighbor (ANN) indexes. After all, how else can you handle massive data sets at scale?

But here’s a provocative counterpoint: recent research—including the paper [Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More?]—calls this assumption into question. The findings hint that the role of retrieval might be much simpler (and cheaper) than we’ve been led to believe. In other words, you might be spending a fortune on vector DB infrastructures and ANN indexes that don’t actually solve your underlying problem—and might not even be necessary as language models evolve.

More often than not, the problem in your RAG application is the “Retrieval” quality and adequacy to the Large Language Model you are feeding the information into.

As more organizations experiment with RAG systems, a recurring challenge has emerged: the details matter—sometimes more than anyone anticipates. Many RAG implementations fail not because of flawed high-level concepts and architecture, but due to subtle missteps in how data is retrieved, stored, and presented to the model. LLM models are still quite sensitive to prompt design and the formatting of retrieved information. What works seamlessly for one model might not translate to another, making it all too easy to introduce hidden pitfalls that degrade performance. Simply pulling documents from a database and stuffing them into a prompt often isn’t enough; small mistakes in formatting, ordering, or content segmentation can mean the difference between a brilliant answer and an incoherent one.

Countless POCs were undertaken as teams rushed to wrap their heads around these new technologies. Many of these projects stumbled over the same hurdles: underestimating how “idiosyncratic” model behaviors can be, overestimating the reliability of current LLMs, and failing to provide consistent structure for prompts and data presentation. The upshot? A wave of repeat failures that, in hindsight, were entirely preventable. Going forward, two paths are becoming clear: either we push for more robust models that can handle a variety of data presentation styles, or we place more structured constraints on how developers feed information into these models. Well-defined APIs, stricter formatting protocols, and more rigorous usage guidelines can drastically reduce failure rates and help teams build truly reliable RAG systems.

The Myth: Global ANN Indexes Everywhere

When building an AI assistant or RAG system, there’s a knee-jerk reaction: “We have to search every user’s data globally using an ANN index!” But the very acronym “ANN”—Approximate Nearest Neighbor—should give you pause. Approximation in scenarios where exact recall matters (like verifying crucial documents) is a dangerous compromise. Worse yet, maintaining these global ANN indexes balloons costs:

Memory Overload: Keeping massive vector stores in memory.

Infrastructure Costs: More compute, more nodes, more money.

Slow Writes: Indexing can crawl along in megabytes per second, versus the gigabytes-per-second throughput you can achieve with streaming.

Missed Results: By definition, approximation risks missing critical data points.

Even if your application truly needs vector-based retrieval, you probably don’t need a global index. Your data likely has natural partitions—by user, organization, department, or project. If you’re not handling thousands of queries per second per partition, you’re over-engineering.

The New Perspective: Larger Context Windows, Simpler Retrieval, and System Prompt Caching

The paper “Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More?” suggests that as models gain the ability to handle larger contexts directly, the reliance on external retrieval layers might diminish. Instead of playing architectural Tetris with a vector DB and global indexes, you could:

Use Simpler Solutions: Stream your data from disk, partitioned naturally, and feed it directly to models with large context windows. The need for intricate retrieval pipelines and approximations fades as models can ingest more raw data at once.

Maintain Exactness: Avoid approximation pitfalls entirely. No missed results, no compromised quality. The model can reason directly over a comprehensive context, removing the uncertainty introduced by lossy retrieval mechanisms.

Reduce Costs: No monstrous memory requirements or expensive indexing steps. By trusting the model’s extended context window, you reduce the complexity and overhead involved in constantly searching and stitching together relevant snippets.

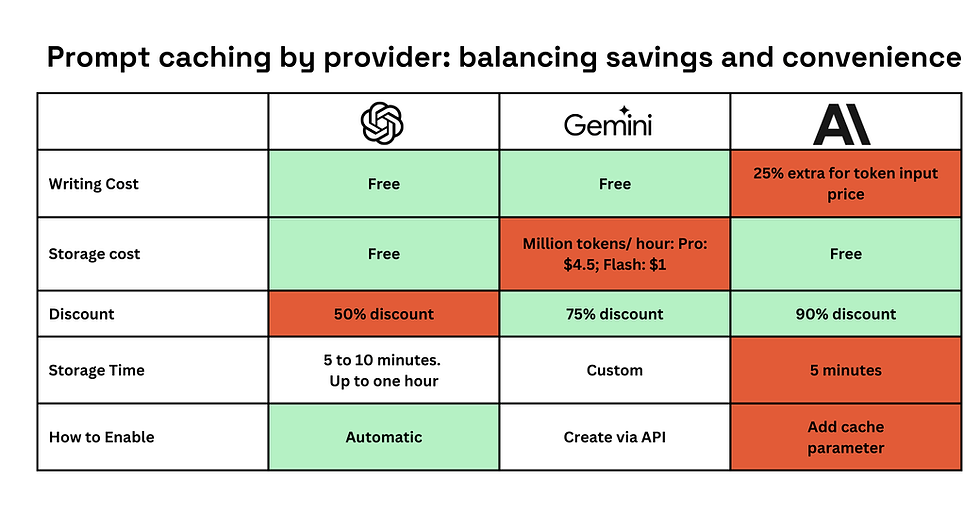

Beyond just extended context windows, recent innovations in context caching—particularly around system prompts—offer another pathway to efficiency. Providers like OpenAI, Anthropic, and Google’s Gemini are exploring ways to cache stable pieces of instruction or background information so that they don’t need to be re-transmitted and re-processed for every single query.

Source: https://www.tensorops.ai/post/comparing-context-caching-in-llms-openai-vs-anthropic-vs-google-gemini

This technique, known as system prompt caching, can help:

Eliminate Redundant Token Costs: Complex instructions, policies, or guidelines that remain constant over multiple requests can be cached, meaning you pay for their inclusion once and not repeatedly. This cuts down on token usage and overall inference cost.

Accelerate Iteration: With the system prompt already “on hand,” subsequent queries or refinements start from a shared, understood baseline. You avoid the friction of repeatedly re-establishing context, allowing for faster iterative development and experimentation.

Simplify Your Infrastructure: By leveraging a model’s built-in capacity to “remember” system instructions through caching, you rely less on external tools or intermediate orchestration steps. Just as large context windows reduce the need for external retrieval systems, system prompt caching reduces the need for complex prompt management solutions.

In a world where language models continue to stretch their context boundaries and develop smarter ways of reusing stable context, the fundamental value proposition of complex ANN solutions, heavy retrieval architectures, and repeated prompt overhead starts to erode. The future points towards a leaner, more direct interplay with large language models—one where scaling context and caching system prompts converge to simplify workflows and streamline costs.

A Practical Guide: Corpora Size vs. Retrieval Solutions

You might still wonder: “But what about scaling?” Let’s lay it out clearly. Different solutions fit different corpus sizes and complexity levels. As language models grow more capable, you may find that you need less machinery than you thought.

The Bigger Picture

As large language models improve, the premise that we need global indexing and fancy ANN tricks starts to crumble. Think beyond the status quo. If your data is naturally partitioned, and you don’t need split-second responses across millions of documents, the simplest solution might be the best:

No Approximation: Don’t lose crucial matches.

Lower Costs: No need for massive infrastructure investments.

Faster Writes: Keep your system agile by streaming data directly.

Greater Flexibility: Easily combine with full-text search, substring matches, and other filters without complex integrations.

Key Insight: You can’t rely on vector DBs alone to “save” your RAG approach—especially as new research signals a future where long-context LLMs reduce the need for complex retrieval layers.

Key Takeaways

Before you commit to an expensive, complex vector database with ANN indexing, consider the evolving capabilities of long-context models and simpler retrieval solutions. Does your data have natural partitions? Are you genuinely hitting a query-per-second ceiling that justifies complexity? Is approximation acceptable in your use case?

In many situations, streaming and partitioning is cheaper, simpler, and safer. As the paper suggests, the future might favor larger context models that make these elaborate retrieval schemes obsolete—or at least render them optional rather than mandatory.

Don’t let the hype convince you that vector DBs and ANN indexes are your only path. They might not save your RAG. In fact, as research and model capabilities evolve, you might not need a very complex architecture at all.

Comments